For OpenGL-based renderers, LightsprintGL implements detection of direct illumination in rr_gl::RRSolverGL.

For Direct3D and custom renderers, you need to write your own implementation.

What needs to be detected

Imagine that you render the static scene with direct lighting and shadowing only, and with all materials replaced by diffuse white.

We need to know the average color of each triangle under these conditions.

We expect it as an array of RGBA8 values, one per triangle, so its length equals the number of triangles in the static scene.

What to do with detected values

Pass the array to RRSolver::setDirectIllumination().

Why it is important

We have no other knowledge about your lights. You can use many light types with very complex lighting equations, and you can change them arbitrarily. That is not a problem. Just let us know the results: the average colors produced by your shader.

How to implement it

Render the whole static scene with a white diffuse material and with direct lighting only.

Modify your shader so that instead of rendering triangles in 3D space, it arranges them in a 2D matrix.

Scale the result down so that each triangle is reduced to its average color.

Read the texture back and you have an array of colors that can be sent to RRSolver::setDirectIllumination().
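The averaging step can also be prototyped on the CPU after readback, which may help when validating a GPU downscale. The sketch below is not part of Lightsprint. The helper name averageCells, the grid layout (triangle i in cell i % cols, i / cols), the use of alpha as a coverage mask, and the byte order of the packed result are all assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper, not a Lightsprint function.
// Input: tightly packed readback image, 4 bytes per texel in R,G,B,A order,
// divided into square cells of cellSize x cellSize texels.
// Assumptions: triangle t was rendered into cell (t % cols, t / cols), and
// the image was cleared to alpha 0, so alpha != 0 marks rasterized texels.
std::vector<std::uint32_t> averageCells(const std::vector<std::uint8_t>& rgba,
                                        unsigned width, unsigned height,
                                        unsigned cellSize, unsigned numTriangles)
{
    assert(cellSize > 0 && width >= cellSize);
    assert(rgba.size() == std::size_t(width) * height * 4);
    const unsigned cols = width / cellSize;
    assert(std::size_t(cols) * (height / cellSize) >= numTriangles);

    std::vector<std::uint32_t> result(numTriangles, 0);
    for (unsigned t = 0; t < numTriangles; ++t)
    {
        const unsigned x0 = (t % cols) * cellSize;
        const unsigned y0 = (t / cols) * cellSize;
        unsigned sum[3] = {0, 0, 0};
        unsigned covered = 0;
        for (unsigned y = y0; y < y0 + cellSize; ++y)
            for (unsigned x = x0; x < x0 + cellSize; ++x)
            {
                const std::uint8_t* p = &rgba[(std::size_t(y) * width + x) * 4];
                if (p[3] == 0) continue; // texel not covered by the triangle
                sum[0] += p[0];
                sum[1] += p[1];
                sum[2] += p[2];
                ++covered;
            }
        if (covered)
        {
            const std::uint32_t r = sum[0] / covered;
            const std::uint32_t g = sum[1] / covered;
            const std::uint32_t b = sum[2] / covered;
            // Assumed packing: R in the lowest byte, A in the highest.
            result[t] = r | (g << 8) | (b << 16) | (255u << 24);
        }
    }
    return result;
}
```

Averaging only covered texels matters because a triangle cannot fill a rectangular cell; including the cleared background would darken every result. Check the byte order expected by your version of the SDK before passing such an array on.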

Possible implementation details

Rendering faces into a 2D matrix requires one renderer enhancement, a 2D position override: the ability to render triangles at specified 2D positions while preserving their original look.

For DX10 generation GPUs, the solution is nearly as simple as adding two lines to the geometry shader. Render as usual, using any combination of triangle list/strip and indexed/non-indexed data, but at the end of the geometry shader, override the output vertex positions passed to the rasterizer with new 2D triangle positions calculated right there from the primitive id.
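The position calculation itself is simple arithmetic. The following C++ sketch mirrors what such a geometry shader might compute; it is an illustration under stated assumptions, not the LightsprintGL code. The name trianglePositionInMatrix, the row-major cell order, and the choice to place each triangle in the lower-left half of its cell are hypothetical.

```cpp
#include <array>
#include <cassert>

struct Vec2 { float x, y; };

// Hypothetical helper: returns the three overridden corner positions of
// triangle `primitiveId` in clip space, where the whole render target spans
// [-1,1] x [-1,1] and is divided into cols x rows equal cells.
// Assumption: cells are filled row by row, starting at the lower-left corner.
std::array<Vec2, 3> trianglePositionInMatrix(unsigned primitiveId,
                                             unsigned cols, unsigned rows)
{
    assert(cols > 0 && rows > 0);
    assert(primitiveId < cols * rows);
    const unsigned col = primitiveId % cols;
    const unsigned row = primitiveId / cols;
    const float w = 2.0f / cols; // cell width in clip space
    const float h = 2.0f / rows; // cell height in clip space
    const float x0 = -1.0f + col * w;
    const float y0 = -1.0f + row * h;
    // Right triangle covering the lower-left half of the cell.
    return {{ {x0, y0}, {x0 + w, y0}, {x0, y0 + h} }};
}
```

Only the positions are replaced; all other vertex attributes (world position, normal, and so on) stay as they were, so lighting is still evaluated as if the triangle were at its original place in the scene.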

For DX9 generation GPUs, the implementation has two steps:

- Triangle positions in the matrix are generated by the CPU into a new vertex stream. At the end of the vertex shader, the output vertex position is replaced by the position read from this additional vertex stream.

- For the purpose of detection, the scene is rendered as a non-indexed triangle list. This is necessary because rendering triangles with shared vertices to completely different positions in the texture requires those vertices to be split.
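Splitting shared vertices amounts to expanding the index buffer. A minimal sketch follows; the Vertex layout and the function name unindexTriangleList are hypothetical and should be adapted to your own mesh format.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical vertex layout used only for this illustration.
struct Vertex
{
    float px, py, pz; // position
    float nx, ny, nz; // normal
};

// Expands an indexed triangle list into a non-indexed one. Every triangle
// gets its own three vertices, so a vertex shared by several triangles is
// duplicated once per use.
std::vector<Vertex> unindexTriangleList(const std::vector<Vertex>& vertices,
                                        const std::vector<unsigned>& indices)
{
    assert(indices.size() % 3 == 0);
    std::vector<Vertex> out;
    out.reserve(indices.size());
    for (unsigned index : indices)
    {
        assert(index < vertices.size());
        out.push_back(vertices[index]);
    }
    return out;
}
```

The additional stream of matrix positions then has the same length as the returned array, with entries 3*t, 3*t+1 and 3*t+2 holding the three corners assigned to triangle t.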

Debugging

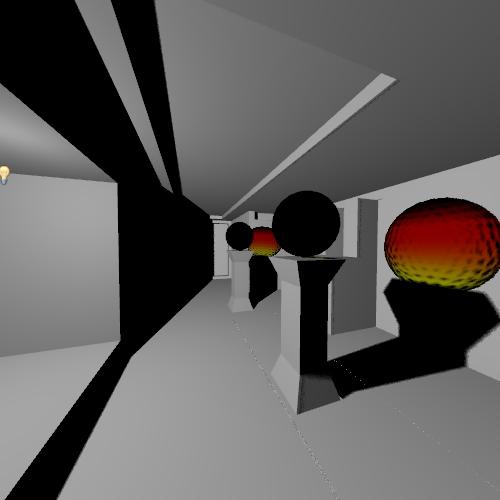

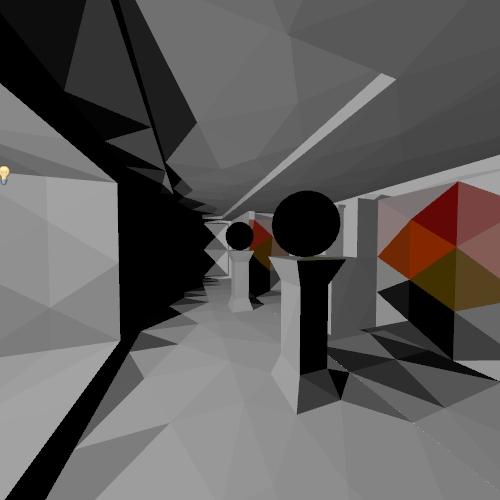

If you call rr_ed::sceneViewer(solver,...) after solver->setDirectIllumination() and then select the menu item Help/Render DDI, the scene viewer visualizes the data sent to setDirectIllumination().

With the "Global illumination/Indirect illumination" property at its default, "Fireball", it shows reference data produced by our implementation. After you switch "Indirect illumination" to "none", it shows your data. The two results should be quite close, with only small noise-like differences.